Why Updates are Important

Technology is a big part of everyone’s life, and it can be hard to keep up with all the changes. One way to make sure your business stays up-to-date is by updating your iOS devices regularly.

Businesses need to stay on top of what’s happening in their industry, which means staying current with the latest technology trends. For many companies that have an iPhone or iPad as their primary device, one way they can do this is by installing regular software updates from Apple to ensure they have access to all the newest features and security patches.

Updates are critical to maintaining the security and productivity of your devices. Updates occur for many reasons, including fixing issues (bugs) within the operating system or how the software works with other apps. A major reason for updates is to resolve vulnerabilities that can be maliciously exploited. Apple’s most recent update aimed to do just this.

iOS 14.8 Update

Apple has been urging customers to update their devices to iOS 14.8 because of a security threat in which malicious PDFs could infect devices with spyware known as Pegasus. Apple believes the spyware has been infecting devices since last February, but the number of victims is low. If you believe your device has been infected, learn how to scan for Pegasus spyware on your phone.

iOS 15 Update

iOS 15 is the next update in the lineup, released on September 20, 2021. While the update comes packed with cool new features, it also tackles privacy, which has some users concerned.

iOS 15 features a “privacy dashboard” that makes it easier for you to see what permissions (location, camera, microphone, etc.) you have allowed for apps on your iPhone. Apple continues its push for transparency with a feature you can enable in Apple Mail that hides your IP address and other data from marketers. For example, some marketing campaigns track when an email is opened and whether its links are followed or its attachments opened. This new feature restricts that kind of data from being shared.

Protection for Children: CSAM Detection

Apple planned on extending privacy protections to children with features such as Child Sexual Abuse Material (CSAM) detection, guidance in Search and Siri, and communication safety in Messages. As of September 3rd, Apple has postponed these updates after harsh backlash from users, but still plans on adding the features to later updates of iOS 15.

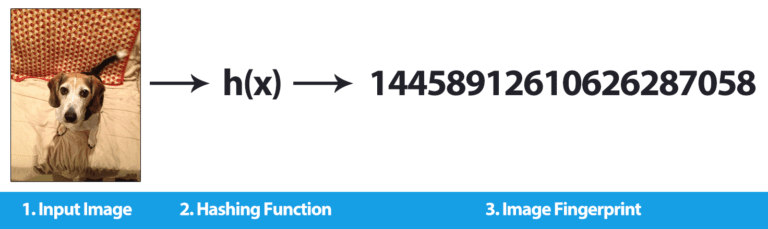

CSAM detection compares the fingerprints of images in iCloud with fingerprints of images in a database of known CSAM. Many users feel Apple was unclear regarding how detection of the images takes place without Apple scanning actual images in your iCloud.

Apple has stated the feature was designed with user privacy in mind, and has tried to explain that your photos are not at risk. Before images are uploaded to iCloud, each image is paired with an “image hash.” An image hash is a coded version of an image. Once an image is uploaded to iCloud, the image hash is compared to a database of known CSAM hashes.

Define Image Hashing

Source: pyimagesearch.com

Image hashing or perceptual hashing is the process of:

- Examining the contents of an image

- Constructing a hash value that uniquely identifies an input image based on the contents of an image

A visual example of the kind of perceptual hashing (image hashing) algorithm Apple could be using can be seen at the top of this section.

This algorithm computes an image’s hash based on the image’s visual appearance. Images that appear perceptually similar should have similar hashes, where “similar” is typically defined as a small Hamming distance between the two hashes, that is, the number of bit positions at which they differ.
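To make the idea concrete, here is a minimal sketch of one simple perceptual hash, a difference hash, together with a Hamming distance function. It is an illustration only and is not Apple’s algorithm. The tiny grayscale grids and the function names `dhash` and `hamming_distance` are made up for this example.

```python
def dhash(pixels):
    """Difference hash of a grayscale image given as rows of brightness values.

    Each bit records whether a pixel is brighter than its right-hand neighbor,
    so the hash depends on relative brightness, not on exact pixel values.
    """
    bits = 0
    for row in pixels:
        for left, right in zip(row, row[1:]):
            bits = (bits << 1) | (1 if left > right else 0)
    return bits


def hamming_distance(hash_a, hash_b):
    """Number of bit positions at which two hashes differ."""
    return bin(hash_a ^ hash_b).count("1")


# Hypothetical 4x5 grayscale thumbnail (values 0-255), invented for illustration.
original = [
    [10, 40, 90, 60, 20],
    [200, 180, 120, 130, 90],
    [15, 15, 80, 70, 75],
    [250, 100, 110, 30, 35],
]

# The same picture, slightly brightened: neighbor ordering is unchanged.
brightened = [[min(255, value + 5) for value in row] for row in original]

# A visually very different picture: the photographic negative.
negative = [[255 - value for value in row] for row in original]

print(hamming_distance(dhash(original), dhash(brightened)))  # 0
print(hamming_distance(dhash(original), dhash(negative)))    # 15
```

The brightened copy produces the same 16-bit hash as the original, while the negative differs in 15 of the 16 bits. A real system would first shrink and convert a full photo to grayscale before hashing it.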

Because an algorithm like this cares about visual resemblance rather than exact file contents, much like facial recognition software, Apple can derive an identifier for each image without holding the picture itself, and can still recognize a known image among the many others a user uploads.

If a certain number of these image hashes match known CSAM images, then action is taken. Neither Apple nor other organizations have access to your images or even your image hashes. The image hashes were developed to protect your privacy, while also allowing authorities to work with the National Center for Missing & Exploited Children.
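The threshold idea can be sketched in a few lines. This is a simplified illustration under stated assumptions, not Apple’s implementation: the hash values, the threshold of 3, and the function names are all hypothetical, and the plain comparison shown here leaves out the privacy protections that keep the hashes themselves hidden.

```python
def hamming_distance(hash_a, hash_b):
    """Number of bit positions at which two hashes differ."""
    return bin(hash_a ^ hash_b).count("1")


def count_matches(image_hashes, known_hashes, max_distance=0):
    """Count images whose hash is within max_distance bits of any known hash."""
    return sum(
        1
        for image_hash in image_hashes
        if any(
            hamming_distance(image_hash, known) <= max_distance
            for known in known_hashes
        )
    )


def exceeds_threshold(image_hashes, known_hashes, threshold, max_distance=0):
    """True only when the number of matching images reaches the threshold."""
    return count_matches(image_hashes, known_hashes, max_distance) >= threshold


# Made-up 8-bit hashes; real hashes are far longer.
known_hashes = {0b10110010, 0b01101100}
library = [0b10110010, 0b00001111, 0b01101100, 0b11110000]

print(count_matches(library, known_hashes))                   # 2
print(exceeds_threshold(library, known_hashes, threshold=3))  # False
```

With a threshold of 3, two matching images are not enough to trigger any action, which is the point of requiring “a certain number” of matches rather than a single one.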

Conclusion

If your photo’s hash is not found on the list of known CSAM hashes, no action is taken. Apple has designed the system to guard against exploitation by other organizations, even if they wanted access to your images.

This means that CSAM images are less likely to end up in places like chat rooms or websites where children may come across them without their parents’ knowledge.

If you want to keep your device safe and productive, updates are essential. Everyone who uses their devices regularly should update as soon as they can, so that vulnerabilities are resolved before malicious actors take advantage of them. We hope this article answered some questions about how iOS updates and image hashing work, why they exist, and what they do.